Spatiotemporal Stereo via Visual Spacetime Orientation Analysis

Contributors

- Mikhail Sizintsev

- Richard P. Wildes

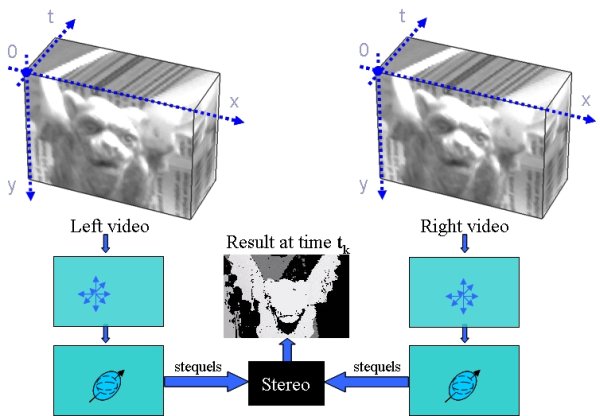

Spatiotemporal stereo. Left and right video streams together with the disparity for the left camera are shown


Overview

Spatiotemporal stereo is concerned with the recovery of the 3D structure of a dynamic scene from a temporal sequence of multiview images. This project presents a novel method for computing temporally coherent disparity maps from a sequence of binocular images through an integrated consideration of image spacetime structure and without explicit recovery of motion. The approach is based on matching spatiotemporal quadric elements (stequels) between views; this matching primitive is shown to provide a natural way to encapsulate both local spatial and temporal structure for disparity estimation.

Empirical evaluation using laboratory-based imagery with ground truth and more typical natural imagery shows that the approach provides considerable benefit in comparison to alternative methods for enforcing temporal coherence in disparity estimation.

Why Spatiotemporal Stereo?

In practical situations, vision-based tasks are performed over time, which implies that visual input can be taken as a temporal sequence of images, e.g., multicamera video sequences rather than still frames. In general, the cameras and scene are in relative motion during a temporally extended capture process. In such situations, it certainly is possible to perform stereo estimation separately at each temporal instance. Ultimately, however, recovery of 3D scene structure must respect dynamic information to ensure that estimates are temporally consistent. Further, in situations where instantaneous multiview matching is ambiguous (e.g., weakly textured surfaces or epipolar-aligned pattern structure), dynamic information has the potential to resolve correspondence by further constraining possible matches.

Stequel and 3D Orientation Analysis of Spacetime

|

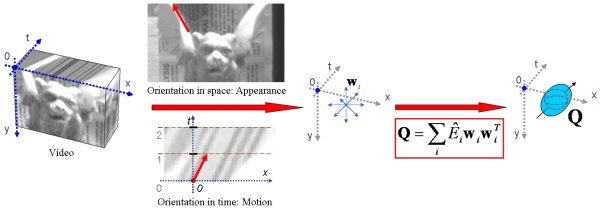

| Construction of the stequel match primitive. At every point of visual spacetime, (x, y, t), 3D orientation analysis is performed via application of oriented energy filters along a set of spanning directions, w. Subsequently, the resulting distribution of oriented energy responses are collapsed in the spatiotemporal quadric, Q, which serves as the local representation of spacetime for matching. |

In dealing with temporal sequences of multicamera images, it is possible to conceptualize stereo correspondence in terms of image spacetime, (x, y, t). In turn, it is instructive to characterize local spacetime structure by analyzing its 3D orientation: orientations in the xy-plane capture spatial appearance (e.g., texture), while orientations that extend into the temporal dimension, t, capture dynamics (e.g., motion). Therefore, a local descriptor that considers orientation in visual spacetime naturally integrates local spatial and temporal characteristics and makes them available for constraining matches.

In our work, we capture local spacetime orientation via application of a bank of 3D, steerable, separable oriented energy filters to input video data [Derpanis and Gryn 2005]. We further collapse the resulting energy distribution by summing unit-direction outer products weighted by their energy response. The resulting spatiotemporal quadric element (stequel) captures local orientation structure in a compact fashion, and in a form that is amenable to formulating stereo matching, as it explicitly casts the orientation information in x-y-t coordinates.
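The collapse step described above (summing unit-direction outer products weighted by energy) can be sketched in a few lines of NumPy. This is a minimal illustration, not the project's implementation: the function name `stequel`, the argument layout, and the toy directions and energies are assumptions made for this example, and the oriented energy filtering itself is taken as already done.

```python
import numpy as np


def stequel(directions, energies):
    """Collapse oriented energies at one spacetime point into a 3x3 quadric.

    directions: (N, 3) array of filter orientations w in (x, y, t).
    energies:   (N,) array of energy responses, one per direction.
    Both the name and the interface are hypothetical, chosen for illustration.
    """
    w = np.asarray(directions, dtype=float)
    e = np.asarray(energies, dtype=float)
    # Normalize defensively so every direction is a unit vector.
    w = w / np.linalg.norm(w, axis=1, keepdims=True)
    # Q = sum_i e_i * w_i w_i^T (energy-weighted sum of outer products).
    return np.einsum("i,ij,ik->jk", e, w, w)


# Toy input: energy 2 along the x axis and energy 3 along the y axis.
toy_directions = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
toy_energies = np.array([2.0, 3.0])
Q = stequel(toy_directions, toy_energies)
print(Q)
```

For this toy input the result is diagonal with entries 2, 3 and 0. Because the directions are unit vectors, the trace of Q equals the total energy, and the eigenvector belonging to the largest eigenvalue points along the dominant spacetime orientation.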

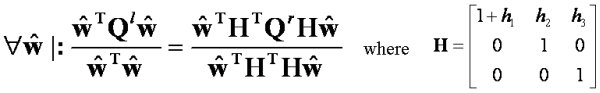

Stequel Matching

Spatiotemporal correspondence constraint for the left and right stequels Q. The constraint is formulated over all possible w, which is a unit vector denoting orientation in xyt space.

To use the stequel in stereo matching, it is necessary to define an effective match measure between two stequels. This leads to the development of an analytic relationship between corresponding left, Q_l, and right, Q_r, stequels across a binocular view. The derived relationship is highlighted above and encompasses weak brightness constancy, binocular rectification, perspective motion and slanted surfaces. Based on this analytic relationship, the match measure between two stequels considered for correspondence is taken as the residual error resulting from solving for H, a simple linear operation. The overall approach to spatiotemporal stereo is summarized in the following diagram.

Schematic sketch of spatiotemporal stereo estimation using stequels
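To make the role of a stequel match measure in a local estimator concrete, the sketch below runs a winner-take-all search along one rectified scanline. It rests on several assumptions that are not taken from this page: the function names, the convention that a left pixel at x corresponds to the right pixel at x - d, and above all the cost itself. The Frobenius distance between quadrics used here is only a stand-in; the measure described above is the residual from solving for H, whose form is given in the constraint figure and is not reproduced here.

```python
import numpy as np


def frobenius_cost(q_left, q_right):
    """Stand-in match cost: Frobenius distance between two 3x3 quadrics.

    NOT the residual-based measure described in the text; it only
    marks where such a measure would plug in.
    """
    return float(np.linalg.norm(q_left - q_right))


def wta_scanline_disparity(Q_left, Q_right, max_disp, cost=frobenius_cost):
    """Winner-take-all disparity for one scanline at a fixed (y, t).

    Q_left, Q_right: (W, 3, 3) arrays of stequels along the scanline.
    Assumed convention: left pixel x matches right pixel x - d, d >= 0.
    """
    width = Q_left.shape[0]
    disparity = np.zeros(width, dtype=int)
    for x in range(width):
        # Only consider candidates that stay inside the right image.
        candidates = range(0, min(max_disp, x) + 1)
        costs = [cost(Q_left[x], Q_right[x - d]) for d in candidates]
        disparity[x] = int(np.argmin(costs))
    return disparity
```

Any other measure with the same two-argument signature can be passed through the `cost` parameter, so swapping in a residual-based measure would leave the search loop unchanged.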


Some Results

We have incorporated stequel matching in both local and global disparity estimation procedures. Further, we have empirically compared our results, both qualitatively and quantitatively, to alternative approaches. As part of this effort, we have acquired novel binocular video sequences with ground truth that are available for download. An example of our results is shown below. Notice how the stequel-based approach provides overall superior disparity estimates relative to the comparison case, especially in resolving ambiguous matches in weakly textured and epipolar-aligned texture regions, in the precision of 3D boundary delineation, and in temporal coherence.

Figure panels: Pixel matching; Stequel matching.

Additional datasets and results are available at our dataset page.

Related Publications

- M. Sizintsev and R. P. Wildes, Spacetime stereo and 3D flow via binocular spatiotemporal orientation analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), to appear, 2014.

- M. Sizintsev and R. P. Wildes, Spatiotemporal stereo and scene flow via stequel matching. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI) 34 (6), 1206-1219, 2012.

- M. Sizintsev and R. P. Wildes, Spatiotemporal oriented energies for spacetime stereo. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2011.

- M. Sizintsev and R. P. Wildes, Spatiotemporal stereo via spatiotemporal quadric element (stequel) matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2009.

- M. Sizintsev and R. P. Wildes, Spatiotemporal stereo via spatiotemporal quadric element (stequel) matching. York University Technical Report, CSE-2008-04, 2008.
